I.3.Probability And Information Theory

Contents:

- Random Variables

- Probability Distributions

- Discrete Variables and Probability Mass Functions

- Continuous Variables and Probability Density Functions

- Marginal Probability

- Conditional Probability

- The Chain Rule of Conditional Probabilities

- Independence and Conditional Independence

- Expectation, Variance and Covariance

- Common Probability Distributions

- Useful Properties of Common Functions

- Bayes’ Rule

- Technical Details of Continuous Variables

- Information Theory

In many cases, it is more practical to use a simple but uncertain rule rather than a complex but certain one, even if the true rule is deterministic and our modeling system has the fidelity to accommodate a complex rule.

When we say that an outcome has a probability \(p\) of occurring, it means that if we repeated the experiment infinitely many times, then proportion \(p\) of the repetitions would result in that outcome.

This kind of reasoning does not seem immediately applicable to propositions that are not repeatable. For that cases we use degree of belief, with 1 indicating absolute certainty that the patient has the flu and 0 indicating absolute certainty that the patient does not have the flu.

So if we have a probability related directly to the rates at which events occur, this probability known as frequentist probability (deal the cards).

If probability related to qualitative levels of certainty, it’s known as Bayesian probability (have patient flue or not).

Random Variables

A random variable is a variable that can take on different values randomly. On its own, a random variable is just a description of the states that are possible; it must be coupled with a probability distribution that specifies how likely each of these states are.

Random variables may be discrete or continuous. A discrete random variable is one that has a finite or countably infinite number of states. Note that these states are not necessarily the integers; they can also just be named states that are not considered to have any numerical value. A continuous random variable is associated with a real value.

Probability Distributions

A probability distribution is a description of how likely a random variable or set of random variables is to take on each of its possible states. The way we describe probability distributions depends on whether the variables are discrete or continuous.

Discrete Variables and Probability Mass Functions

A probability distribution over discrete variables may be described using a probability mass function (PMF) (denoted as \(P\)). \(P(x)\) usually is not the same as \(P(y)\). The probability mass function maps from a state of a random variable to the probability of that random variable taking on that state. Sometimes we define a variable first, then use \(\sim\) notation to specify which distribution it follows later: \(\mathsf{x} \sim P(\mathsf{x})\).

Probability mass functions can act on many variables at the same time. Such a probability distribution over many variables is known as a joint probability distribution. \(P(\mathsf{x}=x, \mathsf{y}=y)\) denotes the probability that \(\mathsf{x}=x\) and \(\mathsf{y}=y\) simultaneously. We may also write \(P(x, y)\) for brevity.

A function \(P\) should satisfy the following properties:

- The domain of \(P\) must be the set of all possible states of \(\mathsf{x}\).

- \(\forall x \in \mathsf{x}, 0 \leq P(x) \leq 1\)

- \(\sum_{x \in \mathsf{x}} P(x) = 1\)

Continuous Variables and Probability Density Functions

When working with continuous random variables, we describe probability distributions using a probability density function (PDF) (denoted as \(p\)).

\(p\) must satisfy the following properties:

- The domain of \(P\) must be the set of all possible states of \(\mathsf{x}\).

- \(\forall x \in \mathsf{x}, p(x) \geq 0\). Note that we do not require \(p(x) \leq 1\).

- \(\int p(x)d(x) = 1\).

A probability density function \(p(x)\) does not give the probability of a specific state directly, instead the probability of landing inside an infinitesimal region with volume \(\delta x\) is given by \(p(x)\delta x\).

We often denote that \(x\) follows the uniform distribution on \([a, b]\) by writing \(x \sim U(a, b)\) or \(u(x; a, b)\).

Marginal Probability

Marginal probability - the probability distribution over the subset is known.

Suppose we know \(P(x, y)\). We can find \(P(x)\) with the sum rule: \(\forall x \in \mathsf{x}, P(x) = \sum_{y} P(x, y)\).

When the values of \(P(x, y)\) are written in a grid with different values of \(x\) in rows and different values of \(y\) in columns, it is natural to sum across a row of the grid, then write \(P(x)\) in the margin of the paper just to the right of the row.

For continuous variables, we need to use integration instead of summation: \(p(x) = \int p(x, y)dy\)

Conditional Probability

Conditional probability - probability of some event, given that some other event has happened. We denote the conditional probability that \(\mathsf{y}=y\) given \(\mathsf{x}=x\) or just \(P(y| x)\) - event \(y\) occur depends on event \(x\). This probability can be computed as:

Computing the consequences of an action is called making an intervention query. Intervention queries are the domain of causal modeling (out of the scope of this book).

The Chain Rule of Conditional Probabilities

Any joint probability distribution over many random variables may be decomposed into conditional distributions over only one variable:

This observation is known as the chain rule or product rule of probability. For example:

Independence and Conditional Independence

Two random variables \(x\) and \(y\) are independent if their probability distribution can be expressed as a product of two factors, one involving only \(x\) and one involving only \(y\):

Two random variables \(x\) and \(y\) are conditionally independent given a random variable \(z\) if the conditional probability distribution over \(x\) and \(y\) factorizes in this way for every value of \(z\):

We can denote independence and conditional independence with compact notation: \(x \bot y\) means that \(x\) and \(y\) are independent, while \(x \bot y | z\) means that \(x\) and \(y\) are conditionally independent given \(z\).

Expectation, Variance and Covariance

The expectation or expected value of some function \(f(x)\) with respect to a probability distribution \(P(x)\) is the average or mean value that \(f\) takes on when \(x\) is drawn from \(P\). For discrete variables this can be computed with a summation:

while for continuous variables, it is computed with an integral:

By default, we can assume that \(\mathbb{E}[\cdot]\) averages over the values of all the random variables inside the brackets. Likewise, when there is no ambiguity, we may omit the square brackets.

Expectations are linear, for example, \(\mathbb{E}_{x}[\alpha f(x) + \beta g(x)] = \alpha \mathbb{E}_{x}[f(x)] + \beta \mathbb{E}_{x}[g(x)]\) when \(\alpha\) and \(\beta\) not dependent on \(x\).

The variance gives a measure of how much the values of a function of a random variable \(\mathsf{x}\) vary as we sample different values of \(x\) from its probability distribution:

When the variance is low, the values of \(f(x)\) cluster near their expected value. The square root of the variance is known as the standard deviation.

The covariance gives some sense of how much two values are linearly related to each other, as well as the scale of these variables:

High absolute values of the covariance mean that the values change very much and are both far from their respective means at the same time. If the sign of the covariance is positive, then both variables tend to take on relatively high values simultaneously. If the sign of the covariance is negative, then one variable tends to take on a relatively high value at the times that the other takes on a relatively low value and vice versa. Other measures such as correlation normalize the contribution of each variable in order to measure only how much the variables are related, rather than also being affected by the scale of the separate variables

The notions of covariance and dependence are related, but are in fact distinct concepts. They are related because two variables that are independent have zero covariance, and two variables that have non-zero covariance are dependent. However, independence is a distinct property from covariance. For two variables to have zero covariance, there must be no linear dependence between them. Independence is a stronger requirement than zero covariance, because independence also excludes nonlinear relationships. It is possible for two variables to be dependent but have zero covariance. For example, suppose we first sample a real number \(x\) from a uniform distribution over the interval \([-1,1]\). We next sample a random variable \(s\). With probability \(\frac{1}{2}\), we choose the value of \(s\) to be 1. Otherwise, we choose the value of \(s\) to be −1. We can then generate a random variable \(y\) by assigning \(y=sx\). Clearly, \(x\) and \(y\) are not independent, because \(x\) completely determines the magnitude of \(y\). However, \(Cov(x, y) = 0\).

The covariance matrix of a random vector \(\textbf{x} \in \mathbb{R}^{n}\) is an \(n \times n\) matrix, such that

The diagonal elements of the covariance give the variance:

Common Probability Distributions

Bernoulli Distribution

The Bernoulli distribution is a distribution over a single binary random variable.It is controlled by a single parameter \(\phi \in [0,1]\), which gives the probability of the random variable being equal to 1. It has the following properties:

Multinoulli Distribution

The multinoulli or categorical distribution is a distribution over a single discrete variable with \(k\) different states, where \(k\) is finite. The multinoulli distribution is parametrized by a vector \(p \in [0,1]^{k-1}\), where \(p_i\) gives the probability of the i-th state. The final, k-th state’s probability is given by \(1- \mathsf{1}^T p\).Note that we must constrain \(\mathsf{1}^T p \leq 1\). Multinoulli distributions are often used to refer to distributions over categories of objects, so we do not usually assume that state 1 has numerical value 1, etc. For this reason, we do not usually need to compute the expectation or variance of multinoulli-distributed random variables.

Gaussian Distribution

The most commonly used distribution over real numbers is the normal distribution, also known as the Gaussian distribution:

The two parameters \(\mu \in \mathbb{R}\) and \(\sigma \in (0, \infty)\) control the normal distribution. The parameter \(\mu\) gives the coordinate of the central peak. This is also the mean of the distribution: \(\mathbb{E}[x] = \mu\). The standard deviation of the distribution is given by \(\sigma\), and the variance by \(\sigma^{2}\).

When we evaluate the PDF, we need to square and invert \(\sigma\). When we need to frequently evaluate the PDF with different parameter values, a more efficient way of parametrizing the distribution is to use a parameter \(\beta \in (0, \infty)\) to control the precision or inverse variance of the distribution:

The normal distribution generalizes to \(\mathbb{R}^n\), in which case it is known as the multivariate normal distribution. It may be parametrized with a positive definite symmetric matrix \(\boldsymbol{\Sigma}\):

The parameter \(\boldsymbol{\mu}\) still gives the mean of the distribution, though now it is vector-valued. The parameter \(\boldsymbol{\Sigma}\) gives the covariance matrix of the distribution. As in the univariate case, when we wish to evaluate the PDF several times for many different values of the parameters, the covariance is not a computationally efficient way to parametrize the distribution, since we need to invert \(\boldsymbol{\Sigma}\) to evaluate the PDF. We can instead use a precision matrix \(\boldsymbol{\beta}\):

We often fix the covariance matrix to be a diagonal matrix. An even simpler version is the isotropic Gaussian distribution, whose covariance matrix is a scalar times the identity matrix.

Exponential and Laplace Distributions

Exponential distribution - a probability distribution with a sharp point at \(x= 0\):

The exponential distribution uses the indicator function \(\boldsymbol{1}_{x \geq 0}\) to assign probability zero to all negative values of \(x\).

A closely related probability distribution that allows us to place a sharp peak of probability mass at an arbitrary point \(\mu\) is the Laplace distribution:

The Dirac Distribution and Empirical Distribution

In some cases, we wish to specify that all of the mass in a probability distribution clusters around a single point. This can be accomplished by defining a PDF using the Dirac delta function, \(\delta (x)\):

The Dirac delta function is defined such that it is zero-valued everywhere except 0, yet integrates to 1. Dirac function is called a generalized function that is defined in terms of its properties when integrated. We can think of the Dirac delta function as being thelimit point of a series of functions that put less and less mass on all points other than zero.

By defining \(p(x)\) to be \(\delta\) shifted by \(- \mu\) we obtain an infinitely narrow and infinitely high peak of probability mass where \(x = \mu\).

A common use of the Dirac delta distribution is as a component of an empirical distribution:

which puts probability mass \(\frac{1}{m}\) on each of the \(m\) points \(\boldsymbol{x}^{(1)}, …, \boldsymbol{x}^{(m)}\) forming a given dataset or collection of samples.

The Dirac delta distribution is only necessary to define the empirical distribution over continuous variables. For discrete variables,the situation is simpler: an empirical distribution can be conceptualized as a multinoulli distribution, with a probability associated to each possible input value that is simply equal to the empirical frequency of that value in the training set.

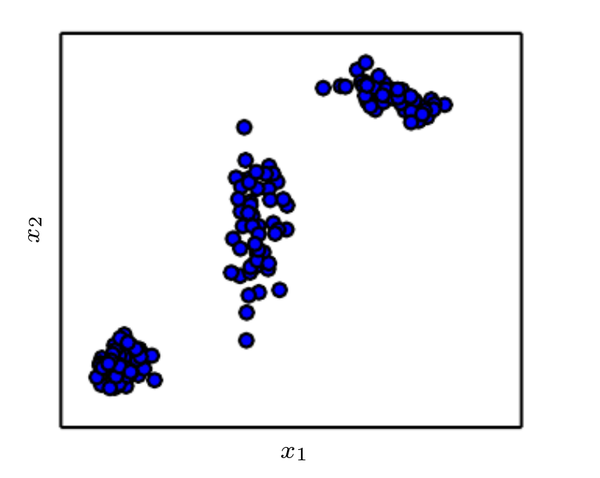

Mixtures of Distributions

One common way of combining distributions is to construct a mixture distribution. A mixture distribution is made up of several component distributions. On each trial, the choice of which component distribution generates the sample is determined by sampling a component identity from a multinoulli distribution:

where \(P(\mathrm{c})\) is the multinoulli distribution over component identities.

A latent variable is a random variable that we cannot observe directly. The component identity variable \(\mathrm{c}\) of the mixture model provides an example. Latent variables may be related to \(\mathrm{x}\) through the joint distribution, in this case, \(P(\mathrm{x}, \mathrm{c}) = P(\mathrm{x} | \mathrm{c})P(\mathrm{c})\). The distribution \(P(\mathrm{c})\) over the latent variable and the distribution \(P(\mathrm{x} | \mathrm{c})\) relating the latent variables to the visible variables determines the shape of the distribution \(P(\mathrm{x})\) even though it is possible to describe \(P(\mathrm{x})\) without reference to the latent variable.

A very powerful and common type of mixture model is the Gaussian mixture model, in which the components \(p(\boldsymbol{x} | c=i)\) are Gaussians. Each component has a separately parametrized mean \(\boldsymbol{\mu}^{(i)}\)boldsymbol{Sigma}^{(i)}`. Some mixtures can have more constraints. For example, the covariances could be shared across components via the constraint \(\boldsymbol{\Sigma}^{(i)} = \boldsymbol{\Sigma} \forall i\). As with a single Gaussian distribution, the mixture of Gaussians might constrain the covariance matrix for each component to be diagonal or isotropic.

In addition to the means and covariances, the parameters of a Gaussian mixture specify the prior probability \(\alpha_{i} = P(\mathrm{c} = i)\) given to each component \(i\). The word “prior” indicates that it expresses the model’s beliefs about \(\mathrm{c}\) before it has observed \(\boldsymbol{x}\). By comparison, \(P(\mathrm{c} | \boldsymbol{x})\) is a posterior probability, because it is computed after observation of \(\boldsymbol{x}\). A Gaussian mixture model is a universal approximator of densities, in the sense that any smooth density can be approximated with any specific, non-zero amount of error by a Gaussian mixture model with enough components

Useful Properties of Common Functions

logistic sigmoid

Logistic sigmoid is commonly used to produce the \(\phi\) parameter of a Bernoulli distribution because its range is \((0,1)\). The sigmoid function saturates when its argument is very positive or very negative, meaning that the function becomes very flat and insensitive to small changes in its input. See figure 1.

Another common function is the softplus

The softplus function can be useful for producing the \(\beta\) or \(\sigma\) parameter of a normal distribution because its range is \((0 , \infty)\).

Common properties to memorize:

Bayes’ Rule

Compute \(P(\mathrm{x} | \mathrm{y})\) from \(P(\mathrm{y} | \mathrm{x})\) if we know \(P(\mathrm{x})\):

also \(P(\mathrm{y})\) can be computed as \(P(\mathrm{x}) = \sum_{x} P(\mathrm{y} | \mathrm{x}) P(\mathrm{x})\)

Technical Details of Continuous Variables

Measure theory provides a rigorous way of describing that a set of points is negligibly small. Such a set is said to have measure zero. It is sufficient to understand the intuition that a set of measure zero occupies no volume in the space we are measuring.

Another useful term from measure theory is almost everywhere. A property that holds almost everywhere holds throughout all of space except for on a set of measure zero. Some important results in probability theory hold for all discrete values but only hold “almost everywhere” for continuous values.

Information Theory

Information theory is a branch of applied mathematics that revolves around quantifying how much information is present in a signal. If you want dive deeper you may read Cover and Thomas book or MacKay book.

The basic intuition behind information theory is that learning that an unlikely event has occurred is more informative than learning that a likely event has occurred.

- Likely events should have low information content, and in the extreme case, events that are guaranteed to happen should have no information content whatsoever.

- Less likely events should have higher information content.

- Independent events should have additive information. For example, finding out that a tossed coin has come up as heads twice should convey twice as much information as finding out that a tossed coin has come up as heads once.

In order to satisfy all three of these properties, we define the self-information of an event \(\mathrm{x} = x\) to be:

Our definition of \(I(x)\) is therefore written in units of nats. One nat is the amount of information gained by observing an event of probability \(\frac{1}{e}\). Other texts use base-2 logarithms and units called bits or shannons; information measured in bits is just a rescaling of information measured in nats.

Self-information deals only with a single outcome. We can quantify the amount of uncertainty in an entire probability distribution using the Shannon entropy:

also denoted \(H(P)\). In other words, the Shannon entropy of a distribution is the expected amount of information in an event drawn from that distribution. It gives a lower bound on the number of bits needed on average to encode symbols drawn from a distribution \(P\). Distributions that are nearly deterministic (where the outcome is nearly certain) have low entropy; distributions that are closer to uniform have high entropy. When \(x\) is continuous, the Shannon entropy is known as the differential entropy.

If we have two separate probability distributions \(P (\mathrm{x})\) and \(Q (\mathrm{x})\) over the same random variable \(\mathrm{x}\), we can measure how different these two distributions are using the Kullback-Leibler (KL) divergence:

The KL divergence has many useful properties, most notably that it is non-negative. The KL divergence is 0 if and only if \(P\) and \(Q\) are the same distribution in the case of discrete variables, or equal “almost everywhere” in the case of continuous variables. Because the KL divergence is non-negative and measures the difference between two distributions, it is often conceptualized as measuring some sort of distance between these distributions. However, it is not a true distance measure because it is not symmetric: \(D_{\mathrm{KL}}(P||Q) \neq D_{\mathrm{KL}}(Q||P)\) for some \(P\) and \(Q\).

A quantity that is closely related to the KL divergence is the cross-entropy \(H(P, Q) = H(P) + D_{\mathrm{KL}}(P||Q)\) which is similar to the KL divergence but lacking the term on the left:

Minimizing the cross-entropy with respect to \(Q\) is equivalent to minimizing the KL divergence, because \(Q\) does not participate in the omitted term.

When computing many of these quantities, it is common to encounter expressions of the form \(0\log0\). By convention, in the context of information theory, we treat these expressions as \(\lim_{x\to 0} x \log x = 0\).